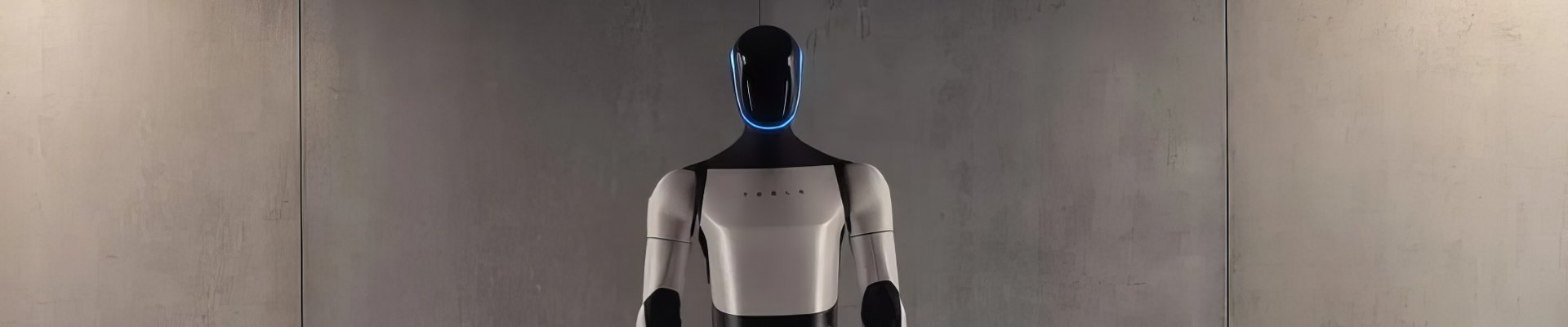

Humanoid Robots

Humanoid robots represent the frontier of robotics - machines designed to mimic human form and function. Unlike traditional robots, humanoids are built to operate in human-centric environments: warehouses, factories, hospitals, offices, and homes. Their value lies not in speed or strength alone, but in their ability to use existing infrastructure (doors, tools, equipment) without requiring the world to be redesigned around them.

Humanoid robots are essentially AI datacenters on legs: they run real-time inference at the edge and offload heavier cognitive loads to the cloud. Because battery capacity is limited to roughly 2-3 kWh, energy efficiency in compute is just as critical as mechatronic efficiency. Humanoids are positioned to become the "iPhone moment" of robotics: not replacing all labor, but serving as a versatile platform for countless applications. As AI autonomy improves and costs decline, humanoids are expected to shift from pilots and demos to fleet-scale deployments across logistics, manufacturing, and service industries.

See also Humanoid-AV Interoperability: Shared Semiconductors, Shared Autonomy

Hardware Stack (Typical)

- Power: Lithium-ion battery packs (solid-state packs are emerging); ~2-5 hours of runtime per charge, depending on task load.

- Actuators: Electric motors with harmonic drives for precise, quiet motion.

- Mobility: Bipedal locomotion (walking, stair climbing); some use wheels for efficiency.

- Manipulation: Multi-jointed hands with dexterous grip for tool use.

- Vision sensors: Stereo cameras, LiDAR, depth sensors.

- Tactile sensors: Force sensors in hands/feet.

- Balance sensors: IMUs (accelerometers and gyroscopes).

- Cooling & Safety: Passive/active cooling, joint torque limits, fall detection.
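The joint torque limits and fall detection listed above can be sketched as two simple checks. This is a minimal illustration, not any vendor's safety stack: the joint names, limit values, and 35° tilt threshold are hypothetical, and on a real robot these checks would more likely run on motor controllers and safety microcontrollers than in Python.

```python
# Hypothetical per-joint torque limits in N·m; real values depend on the actuator.
TORQUE_LIMITS_NM = {
    "knee_left": 150.0,
    "knee_right": 150.0,
    "wrist_left": 20.0,
}

# Hypothetical tilt threshold (degrees from upright) treated as "falling".
FALL_TILT_DEG = 35.0


def clamp_torques(commands: dict[str, float], limits: dict[str, float]) -> dict[str, float]:
    """Clip each commanded torque to [-limit, +limit] for its joint."""
    clamped = {}
    for joint, torque in commands.items():
        # Unknown joints raise KeyError: fail loudly instead of passing a command through.
        limit = limits[joint]
        clamped[joint] = max(-limit, min(limit, torque))
    return clamped


def is_falling(pitch_deg: float, roll_deg: float, threshold_deg: float = FALL_TILT_DEG) -> bool:
    """Flag a fall when either tilt angle exceeds the threshold."""
    return abs(pitch_deg) > threshold_deg or abs(roll_deg) > threshold_deg
```

For example, a 200 N·m command to `knee_left` is clipped to 150 N·m, and a 40° pitch makes `is_falling` return `True`.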

Battery & Power

- Battery size: Currently ~2-3 kWh lithium-ion packs (several times the capacity of a typical e-bike battery; closer to an electric moped pack).

- Provides ~2-5 hours of continuous operation depending on task load.

- Charging: plug-in via standard AC/DC chargers, sometimes using EV charging (EVSE) equipment.

- Hot-swappable packs in some models (e.g., Apptronik Apollo).

- Wireless charging pads are in R&D for autonomous docking.

- Power management balances locomotion, compute, and manipulation loads.

- Compute alone can draw 200-400 W when running on-board LLM inference, depending on the hardware.
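The figures above can be sanity-checked with simple energy arithmetic: a 2-3 kWh pack lasting 2-5 hours implies an average draw of roughly 400-1500 W, so a 200-400 W compute load is a substantial share of the budget. A minimal sketch, assuming the ideal case (no conversion losses, battery reserve, or peak loads); the 800 W average draw used below is an assumed example, not a measured figure.

```python
def runtime_hours(capacity_kwh: float, avg_draw_w: float) -> float:
    """Ideal runtime = stored energy / average power draw (ignores losses and reserve)."""
    return capacity_kwh * 1000.0 / avg_draw_w


def implied_avg_draw_w(capacity_kwh: float, runtime_h: float) -> float:
    """Average power draw implied by a pack capacity and an observed runtime."""
    return capacity_kwh * 1000.0 / runtime_h


def compute_share(compute_w: float, total_w: float) -> float:
    """Fraction of the total power budget consumed by compute."""
    return compute_w / total_w


# Bounds implied by the ranges above: 2 kWh over 5 h, and 3 kWh over 2 h.
low_draw = implied_avg_draw_w(2.0, 5.0)
high_draw = implied_avg_draw_w(3.0, 2.0)
```

With these assumed numbers, `runtime_hours(2.5, 800)` gives 3.125 h and a 300 W compute load is 37.5% of that budget; removing it (500 W average) would stretch runtime to 5.0 h, which is why compute efficiency matters as much as mechatronic efficiency.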

Compute Stack

- High-performance GPUs and GPU-based SoCs (NVIDIA Jetson modules such as AGX Orin, RTX-class GPUs), AI ASICs, or custom SoCs.

- Motor controllers (FPGA or MCU-based) for precise actuation.

- Safety microcontrollers for fall detection and emergency stop.

- Sensor fusion processors for IMUs, LiDAR, and tactile inputs.
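To illustrate what a sensor-fusion stage does with IMU data, the sketch below is a single-axis complementary filter, a common lightweight technique (not necessarily what any given robot uses; production systems often use Kalman-family filters). It assumes the gyro rate and the accelerometer-derived tilt share the same sign convention, and `alpha = 0.98` is an assumed tuning value.

```python
import math


class ComplementaryFilter:
    """Fuses gyro rate (smooth but drifts) with accelerometer tilt (noisy but drift-free)."""

    def __init__(self, alpha: float = 0.98) -> None:
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("alpha must be in [0, 1]")
        self.alpha = alpha
        self.angle_rad = 0.0

    def update(self, gyro_rate_rad_s: float, ax: float, az: float, dt: float) -> float:
        # Tilt implied by the direction of gravity seen by the accelerometer (x-z plane).
        accel_angle = math.atan2(ax, az)
        # Integrate the gyro for short-term motion ...
        gyro_angle = self.angle_rad + gyro_rate_rad_s * dt
        # ... then blend toward the accelerometer angle to cancel long-term drift.
        self.angle_rad = self.alpha * gyro_angle + (1.0 - self.alpha) * accel_angle
        return self.angle_rad
```

A higher `alpha` trusts the gyro more (smoother output, slower drift correction); a lower `alpha` follows the accelerometer more closely (faster correction, more noise).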

Memory & Storage Stack

- RAM: 16-64 GB, often LPDDR5 for high bandwidth at low power.

- Local Storage: 256 GB - 2 TB SSD for OS, control software, and local datasets.

- Edge Caches: Store pre-trained LLMs and reinforcement learning policies for offline operation.
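A rough way to see which models fit in the 16-64 GB of RAM above: weight memory is approximately parameter count times bytes per parameter. The sketch counts weights only; activations, KV cache, and the rest of the software stack need extra headroom, modeled here as an assumed `reserved_gb`. The model sizes in the example are illustrative, not tied to any particular product.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate weight-only memory in GB (1 GB = 1e9 bytes).

    (params_billion * 1e9 params) * (bits / 8 bytes) / 1e9 bytes-per-GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_param / 8


def fits_in_ram(params_billion: float, bits_per_param: int,
                ram_gb: float, reserved_gb: float) -> bool:
    """True if the weights fit in RAM after reserving room for everything else."""
    return weight_memory_gb(params_billion, bits_per_param) <= ram_gb - reserved_gb
```

Under these assumptions a 7B-parameter model at 4 bits (~3.5 GB) fits comfortably in 16 GB, while a 70B model at 16 bits (~140 GB) does not fit even in 64 GB, one plausible reason heavier reasoning is handed off to the cloud.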

Networking Stack

- Local: High-speed internal bus (CAN, EtherCAT, or custom real-time links).

- External: Wi-Fi 6/6E, 5G (private networks for industrial deployments), and Ethernet when docked.

- Fleet/Cloud Integration: Telemetry, updates, and LLM access streamed via secure links.
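To make the internal-bus bullet concrete: a classic CAN frame carries at most 8 data bytes, so joint commands are commonly packed into compact fixed-point fields. The layout below is entirely hypothetical (field names, scaling, and order are assumptions, not any vendor's protocol), and the sketch only encodes and decodes the payload; it does not send anything on a bus.

```python
import struct

# Hypothetical joint-command layout for one classic CAN frame (little-endian, no padding):
#   uint8  joint_id
#   uint8  mode          (illustrative: 0 = torque, 1 = position)
#   int16  target        in 0.01 units (N·m or degrees, depending on mode)
#   uint16 max_torque    in 0.1 N·m
#   uint8  seq           rolling sequence counter
#   pad    1 byte
JOINT_CMD = struct.Struct("<BBhHBx")
CLASSIC_CAN_MAX_PAYLOAD = 8

if JOINT_CMD.size > CLASSIC_CAN_MAX_PAYLOAD:
    raise RuntimeError("joint command layout does not fit in a classic CAN frame")


def pack_joint_command(joint_id: int, mode: int, target: float,
                       max_torque_nm: float, seq: int) -> bytes:
    """Scale floats to fixed-point integers and pack them into an 8-byte payload."""
    return JOINT_CMD.pack(joint_id, mode, round(target * 100),
                          round(max_torque_nm * 10), seq & 0xFF)


def unpack_joint_command(payload: bytes) -> tuple[int, int, float, float, int]:
    """Inverse of pack_joint_command."""
    joint_id, mode, target_raw, max_torque_raw, seq = JOINT_CMD.unpack(payload)
    return joint_id, mode, target_raw / 100, max_torque_raw / 10, seq
```

Values outside the int16/uint16 ranges make `struct.pack` raise `struct.error`, which is a reasonable failure mode for a malformed command in this sketch.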

LLMs & Agents

- Uses cloud-connected large language models (GPT, Gemini, or proprietary).

- Can speak, listen, and respond in natural conversation.

- Often paired with text-to-speech (TTS) and speech-to-text (STT) engines for human interaction.

- Multi-modal perception + reasoning (CV + NLP).

- Can plan multi-step tasks, ask clarifying questions, and hand off to a cloud LLM when needed.

- In industrial deployments, agent behavior is usually bounded by safety filters and task-specific constraints.
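The safety-filter and hand-off ideas above can be sketched as a rule-based check that vets each planned step against a task allowlist and physical limits, plus a simple confidence-based rule for escalating to the cloud model. Everything here is hypothetical: the action names, limits, and the 0.7 confidence threshold are assumptions, and real deployments may use very different triggers and constraint representations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedStep:
    action: str              # hypothetical action names, e.g. "pick", "place", "move_to"
    payload_kg: float = 0.0
    speed_m_s: float = 0.0


@dataclass(frozen=True)
class SafetyPolicy:
    allowed_actions: frozenset[str]
    max_payload_kg: float
    max_speed_m_s: float


def vet_step(step: PlannedStep, policy: SafetyPolicy) -> tuple[bool, str]:
    """Reject any planned step outside the task's allowlist or physical limits."""
    if step.action not in policy.allowed_actions:
        return False, f"action '{step.action}' not permitted for this task"
    if step.payload_kg > policy.max_payload_kg:
        return False, "payload exceeds limit"
    if step.speed_m_s > policy.max_speed_m_s:
        return False, "speed exceeds limit"
    return True, "ok"


def choose_planner(local_confidence: float, threshold: float = 0.7) -> str:
    """Hand off to the cloud model when the on-board model's confidence is low."""
    return "local" if local_confidence >= threshold else "cloud"
```

In this sketch a rejected step would go back to the planner for re-planning or a clarifying question rather than being executed.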